Some TweetSharp, Accord.NET and the author's code = Machine Learning to detect hacked tweets...

Primary Objects - Detecting a Hacked Tweet with Machine Learning

Introduction

This article is part of a presentation for The Associated Press, 2013 Technology Summit.

On April 23, 2013, the stock market experienced one of its biggest flash-crash drops of the year, with the Dow Jones Industrial Average falling 143 points (roughly 1%) within minutes. Unlike the 2012 stock market blip, this one wasn't caused by an individual trade but by a single tweet from the Associated Press (AP) account on the social network Twitter. The tweet, of course, wasn't written by AP, but by an impostor who had temporarily gained control of the account. Given the impact of real-time messaging services such as Twitter, could the tweet have been detected as hacked?

In this article, we'll discuss how to use machine learning and so-called "big data" analysis to mine large amounts of information and extract meaningful relationships from it. In particular, we'll walk through a prototype machine learning example that attempts to classify tweets as authored by AP or not. We'll examine learning curves to see how they help validate machine learning algorithms and models. As a final test, we'll run the program on the hacked tweet and see whether it correctly classifies the tweet as authentic or hacked.

...

Why, Hello There, Twitter

The most important part of a machine learning solution is the amount and quality of the data it learns from. To classify AP's tweets by authorship, we'll need to extract tweets from AP's Twitter account history to serve as the positive cases. We'll also need a collection of non-AP tweets to serve as the negative cases.

To aid in the collection of tweets, the C# .NET library TweetSharp was used. Queries were initially prepared to extract AP tweets, using the search term "from:AP", and later refined to include date ranges.
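The article doesn't show how the date ranges were expressed. As a minimal sketch, assuming Twitter's `since:` and `until:` search operators (with `until:` treated as exclusive, so consecutive windows don't overlap), the refined queries could be generated per time window like this. The helper `build_queries` and the 7-day window size are hypothetical, and no TweetSharp API is shown.

```python
from datetime import date, timedelta


def build_queries(account: str, start: date, end: date, days_per_window: int = 7) -> list[str]:
    """Split [start, end) into fixed-size windows and emit one search string per window.

    Assumes Twitter-style `since:`/`until:` operators; this is an illustration,
    not the query format used in the original article.
    """
    queries = []
    step = timedelta(days=days_per_window)
    window_start = start
    while window_start < end:
        window_end = min(window_start + step, end)  # last window may be shorter
        queries.append(
            f"from:{account} since:{window_start.isoformat()} until:{window_end.isoformat()}"
        )
        window_start = window_end
    return queries


for q in build_queries("AP", date(2013, 4, 1), date(2013, 4, 15)):
    print(q)
```

Splitting a long history into small windows keeps each request modest, which helps when an API caps the number of results per query.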

...

The results from TweetSharp are then saved to a CSV file using the C# .NET library CsvHelper.
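The author used CsvHelper in C#; as a rough Python analogue, the standard `csv` module can store each tweet alongside its label. The column names `text` and `label` (1 = authored by AP, 0 = not) are hypothetical choices for this sketch, not the article's file layout.

```python
import csv
import io


def write_tweets_csv(rows, out):
    """Write (text, label) pairs with a header row; label 1 = AP, 0 = non-AP."""
    writer = csv.writer(out)
    writer.writerow(["text", "label"])
    for text, label in rows:
        writer.writerow([text, label])  # csv quotes fields containing commas


def read_tweets_csv(inp):
    """Read the file back into (text, label) tuples with integer labels."""
    reader = csv.DictReader(inp)
    return [(row["text"], int(row["label"])) for row in reader]


buffer = io.StringIO(newline="")
write_tweets_csv([("Breaking: example headline, with a comma", 1), ("lol my cat", 0)], buffer)
buffer.seek(0)
print(read_tweets_csv(buffer))
```

Letting the CSV library handle quoting matters for tweets, which frequently contain commas and quotation marks.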

While TweetSharp worked quite well for extracting a limited history of tweets, the API apparently limits how far back in time tweets can be retrieved. On its own, this would leave us with only about 1,100 examples to train on; ideally, we would use far more data. Notably, initial training on this minimal data set still achieved 94% accuracy, although the learning curves indicated that more data could push accuracy higher.

...

Digitizing Tweets

To allow the machine learning algorithm to process the tweets, each tweet needs to be converted into a numerical format. There are several ways to do this, such as TF*IDF, but word indexing appeared to work best.
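For contrast with the word-indexing approach the author settled on, here is a minimal sketch of one common TF*IDF variant: raw term count multiplied by log(N / df), where N is the number of tweets and df is how many tweets contain the term. Other weighting variants exist, and this is not claimed to be what the author tried.

```python
import math
from collections import Counter


def tf_idf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document.

    weight = (count of term in doc) * log(N / number of docs containing term)
    """
    n = len(docs)
    df = Counter()
    for tokens in docs:
        df.update(set(tokens))  # count each term once per document
    weights = []
    for tokens in docs:
        tf = Counter(tokens)
        weights.append({t: tf[t] * math.log(n / df[t]) for t in tf})
    return weights


docs = [["ap", "breaking", "news"], ["breaking", "lol"], ["ap", "news", "news"]]
for w in tf_idf(docs):
    print(w)
```

Words that appear in every tweet get a weight of zero, while rare words are boosted; word indexing instead records only whether each vocabulary word is present.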

First, the collection of tweets was split into two portions: the training set and the cross-validation (CV) set. The training set is used for all learning, while the CV set is held out for calculating accuracy scores.
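A minimal sketch of such a split, assuming a random shuffle. The 30% CV fraction and the fixed seed are illustrative values, not taken from the article.

```python
import random


def split_train_cv(examples, cv_fraction=0.3, seed=42):
    """Shuffle a copy of the examples and split off a cross-validation portion.

    Returns (train, cv). cv_fraction and seed are hypothetical defaults.
    """
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_cv = int(len(shuffled) * cv_fraction)
    return shuffled[n_cv:], shuffled[:n_cv]


data = [(f"tweet {i}", i % 2) for i in range(10)]
train, cv = split_train_cv(data)
print(len(train), len(cv))  # 7 3
```

Because the data is roughly half AP and half non-AP, a plain shuffle usually keeps the classes balanced; a stratified split that shuffles each class separately would guarantee it.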

A vocabulary was built from the training set by tokenizing the tweet text and then applying the Porter stemmer algorithm (Centivus.EnglishStemmer.dll) to obtain the set of distinct base words.
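The vocabulary step can be sketched in Python as tokenize, stem, then collect distinct words. The Python standard library has no Porter stemmer, so `crude_stem` below is a hypothetical suffix-stripping stand-in, not the Porter algorithm; the `stem` parameter lets a real stemmer be passed in instead. The tokenizer regex is also an assumption.

```python
import re


def tokenize(text):
    """Lowercase and keep runs of letters, digits, and apostrophes."""
    return re.findall(r"[a-z0-9']+", text.lower())


def crude_stem(word):
    """Hypothetical stand-in for a Porter stemmer: strips a few common suffixes."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def build_vocabulary(texts, stem=crude_stem):
    """Return a sorted list of distinct stemmed words from the training texts."""
    vocab = set()
    for text in texts:
        vocab.update(stem(tok) for tok in tokenize(text))
    return sorted(vocab)


print(build_vocabulary(["Police reporting explosions", "Reported: explosion at site"]))
```

Stemming lets "reporting" and "reported" share one vocabulary entry, which keeps the vocabulary smaller and the feature vectors shorter.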

We then digitize each tweet in the training set into an array of ints, one per vocabulary word. For each tweet, we check each word in the vocabulary and see if it exists in the current tweet. ...
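The remaining details are elided above, so this sketch assumes the simplest reading: a binary bag of words where position i is 1 if vocabulary word i occurs in the tweet and 0 otherwise. It takes the tweet's already tokenized and stemmed words as input; `digitize` is a hypothetical name.

```python
def digitize(tweet_words, vocabulary):
    """Map one tweet to a fixed-length list of ints: position i is 1 if
    vocabulary[i] occurs in the tweet, else 0. Words outside the vocabulary
    are ignored."""
    present = set(tweet_words)
    return [1 if word in present else 0 for word in vocabulary]


vocabulary = ["at", "explosion", "police", "report", "site"]
print(digitize(["police", "report", "explosion"], vocabulary))  # [0, 1, 1, 1, 0]
```

Every tweet maps to a vector of the same length as the vocabulary, which is the fixed-size numeric input most classifiers expect.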

...

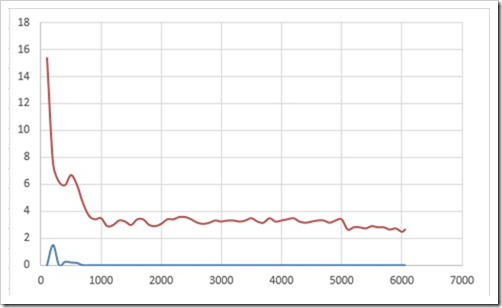

Proof That We're Learning Something

Learning curves are an excellent way of telling whether a machine learning algorithm is actually learning. Plotting accuracy against the number of training set examples shows whether the algorithm improves as the data grows, and whether adding more data will actually help or hinder accuracy.
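To make the idea concrete, here is a minimal Python sketch of computing learning-curve points. The author used Accord.NET, and this excerpt doesn't name the classifier, so the nearest-centroid model below (`train_centroids`, `predict`) is a hypothetical stand-in, not the author's algorithm. For each training size m, it fits on the first m examples and records training and CV accuracy; plotting both against m gives the curve.

```python
def train_centroids(X, y):
    """Hypothetical stand-in classifier: the mean feature vector of each class."""
    sums, counts = {}, {}
    for x, label in zip(X, y):
        s = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            s[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in s] for label, s in sums.items()}


def predict(centroids, x):
    """Assign x to the class whose centroid is nearest (squared Euclidean distance)."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


def accuracy(centroids, X, y):
    correct = sum(predict(centroids, x) == label for x, label in zip(X, y))
    return correct / len(y)


def learning_curve(X_train, y_train, X_cv, y_cv, sizes):
    """Return (m, training accuracy, CV accuracy) for each training-set size m."""
    curve = []
    for m in sizes:
        model = train_centroids(X_train[:m], y_train[:m])
        curve.append((m, accuracy(model, X_train[:m], y_train[:m]),
                      accuracy(model, X_cv, y_cv)))
    return curve


X_train = [[1, 1, 0, 0], [0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 0, 1], [1, 1, 1, 0], [0, 1, 1, 1]]
y_train = [1, 0, 1, 0, 1, 0]
X_cv, y_cv = [[1, 1, 0, 0], [0, 0, 1, 1]], [1, 0]
for point in learning_curve(X_train, y_train, X_cv, y_cv, [2, 4, 6]):
    print(point)
```

When reading a real curve, a CV accuracy that is still rising and sits well below training accuracy at the largest m suggests that more data is likely to help.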

For machine learning algorithms in C# .NET, the Accord.NET library was used.

...

Results?

The best algorithm was trained on 6,054 tweets, roughly half authored by AP and the rest by other users. The program achieved final accuracies of 100% on the training set, 97.38% on the CV set, and 96.23% on the test set. Judging by the learning curve, there is still room to improve further by providing more training examples.

Here is a view of the resulting program running on real, live data (the test set). The program had never seen these tweets before in its whole life. Honest!...

There are some great code and ideas here, but best of all, the author makes the concepts understandable and relevant...
